Is the Riemann hypothesis true?

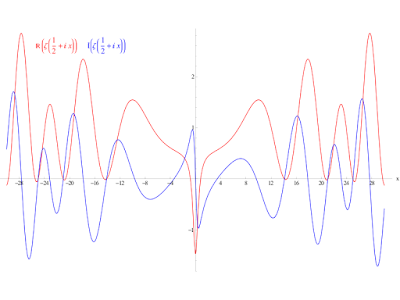

In 2001, Jeffrey Lagarias proved that the Riemann hypothesis is equivalent to the following statement (proof here):

`\sigma(n) \le H_n + \ln(H_n) \cdot \exp(H_n)`

with strict inequality for `n > 1`, where `\sigma(n)` is the sum of the positive divisors of `n` and `H_n` is the `n`-th harmonic number.

In 1913, Grönwall showed that the asymptotic growth rate of the sigma function can be expressed by:

`\limsup_{n \to \infty} \frac{\sigma(n)}{n \ln \ln n} = \exp(\gamma)`

where `\limsup` denotes the limit superior.

Relying on these two theorems, we can show that:

`\lim_{n \to \infty} \frac{\exp(\gamma) \cdot n \ln \ln n}{H_n + \ln(H_n) \cdot \exp(H_n)} = 1`

with strict inequality for each `1 < n < \infty` (see Wolfram|Alpha):

`\exp(\gamma) \cdot n \ln \ln n < H_n + \ln(H_n) \cdot \exp(H_n)`

If the Riemann hypothesis is true, then for each `n \ge 5041`:

`\sigma(n) \le \exp(\gamma) \cdot n \ln \ln n`

By using the usual definition of the `\gamma` constant:

`\gamma = \lim_{n \to \infty} (H_n - \ln n)`
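As a numerical sanity check only (a finite computation proves nothing about the Riemann hypothesis), the quantities in the inequalities above can be tabulated for a few values of `n`. This is a minimal sketch: the helper names `sigma`, `harmonic`, `lagarias_bound` and `robin_bound` are illustrative and do not come from the cited papers, and the sample values of `n` are arbitrary choices.

```python
import math

# Euler-Mascheroni constant, hard-coded to double precision (assumption:
# this precision is enough for the small n used here).
EULER_GAMMA = 0.5772156649015329


def sigma(n: int) -> int:
    """Sum of the positive divisors of n, by trial division up to sqrt(n)."""
    total = 0
    d = 1
    while d * d <= n:
        if n % d == 0:
            total += d
            if d != n // d:  # avoid counting a square root twice
                total += n // d
        d += 1
    return total


def harmonic(n: int) -> float:
    """n-th harmonic number H_n = 1 + 1/2 + ... + 1/n."""
    return math.fsum(1.0 / k for k in range(1, n + 1))


def lagarias_bound(n: int) -> float:
    """Right-hand side H_n + ln(H_n) * exp(H_n) of the first inequality."""
    h = harmonic(n)
    return h + math.log(h) * math.exp(h)


def robin_bound(n: int) -> float:
    """exp(gamma) * n * ln(ln(n)); only defined for n >= 2."""
    return math.exp(EULER_GAMMA) * n * math.log(math.log(n))


# Sample values chosen for illustration, including both sides of n = 5041.
for n in (12, 5040, 5041, 10080, 55440):
    print(n, sigma(n), round(robin_bound(n), 2), round(lagarias_bound(n), 2))
```

The case `n = 5040` shows why the last inequality is only stated for `n \ge 5041`: there `\sigma(n)` exceeds `\exp(\gamma) \cdot n \ln \ln n` while still staying below `H_n + \ln(H_n) \cdot \exp(H_n)`.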